Modern websites present significant hurdles for data extraction. Developers constantly face dynamic JavaScript-heavy pages, aggressive anti-bot measures like CAPTCHAs, and frequent IP blocks. Basic tutorials often fall short, failing to provide solutions for these real-world challenges. This leaves developers spending more time troubleshooting access issues than extracting valuable data. The core problem is finding reliable, up-to-date Python web scraping examples that are both practical and resilient enough to handle these complexities.

This guide moves beyond theoretical concepts to offer battle-tested, copy-pasteable solutions for a diverse set of targets, from e-commerce giants like Amazon to educational platforms like Udemy. Each example includes a detailed breakdown of the strategy, the full Python code, and an explanation of the results. You will learn not just how to write a scraper but how to build a robust data extraction pipeline. We will explore various approaches, from using libraries like Scrapy to leveraging a powerful, API-driven method with Olostep for guaranteed results on complex sites.

Beyond e-commerce and learning platforms, web scraping is a vital tool for business strategy, particularly in gathering competitor intelligence. By analyzing rivals' pricing, product catalogs, and marketing tactics, businesses can make more informed decisions. The techniques in this article provide the foundation for building these powerful analytical tools.

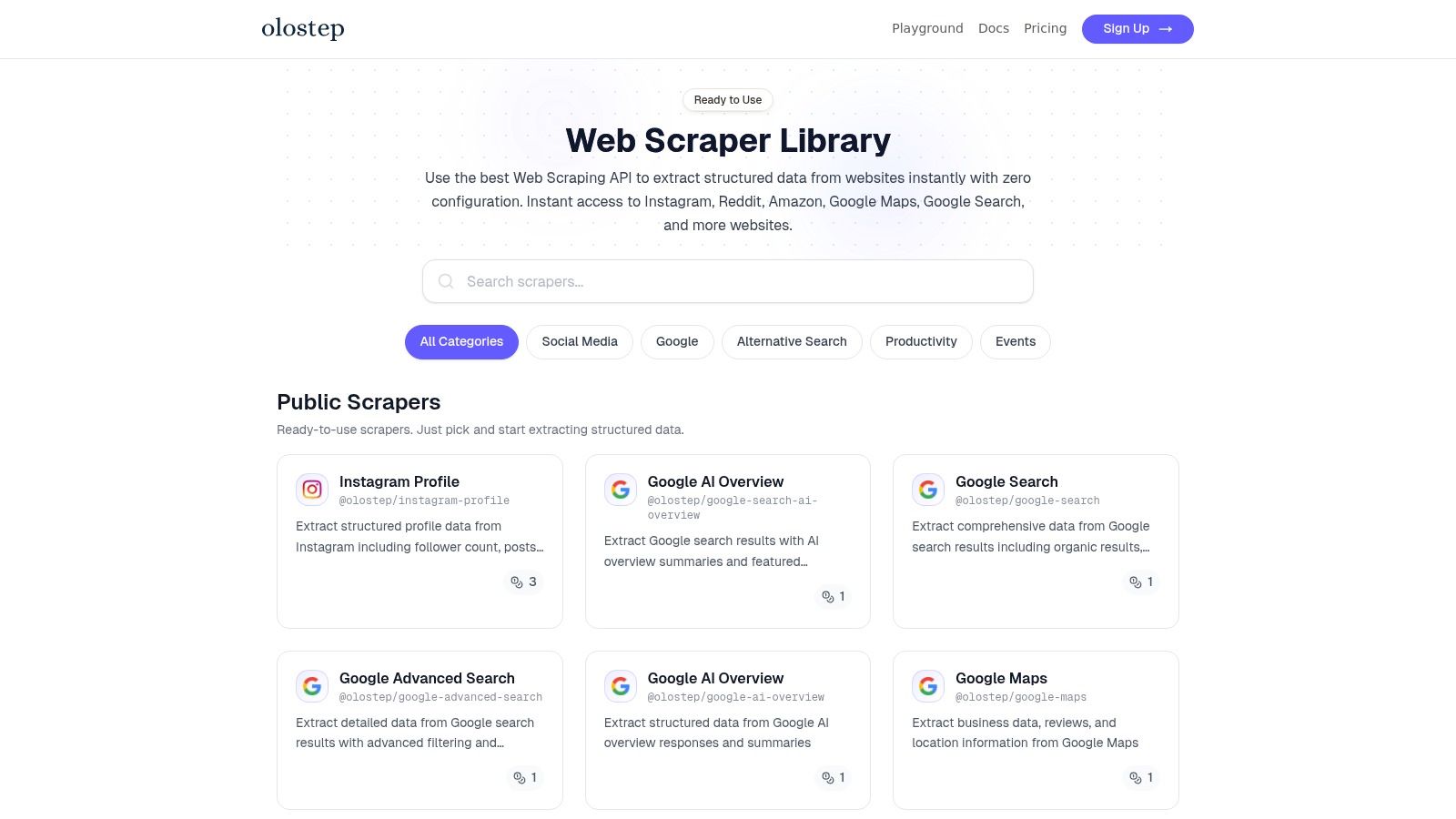

1. Olostep Scrapers

Olostep Scrapers emerge as a premier solution for developers and data scientists seeking a robust, scalable, and versatile web scraping infrastructure. It is engineered to handle enterprise-level demands, supporting up to 100,000 simultaneous requests. This makes it an ideal engine for high-throughput tasks like real-time price monitoring, large-scale market research, and populating AI training datasets. The platform’s strength lies in its ability to deliver clean, structured data with exceptional speed and reliability, abstracting away the complexities of anti-bot measures and proxy management.

What sets Olostep apart is its unique combination of raw power and flexibility. While many services lock users into a specific output, Olostep supports multiple formats, including structured JSON, Markdown, HTML, and even PDF. This versatility ensures that the extracted data can be seamlessly integrated into diverse workflows, from developer pipelines to business intelligence dashboards.

Strategic Advantages and Use Cases

Olostep is more than just a data extraction tool; it's a community-driven ecosystem. A standout feature is its support for custom parsers, allowing developers to build, share, and leverage community-created logic to extract precisely the data they need from complex or niche websites. This collaborative approach significantly reduces development time and enhances the platform's adaptability to new challenges.

- High-Volume Data Aggregation: Perfect for AI startups and data scientists needing to gather massive datasets from multiple sources without worrying about rate limits or IP blocks.

- Competitive Intelligence: Businesses can track competitor pricing, product catalogs, and market sentiment in real-time, enabling agile strategic decisions.

- Content Syndication: Developers can effortlessly pull content from various web pages and reformat it for different platforms using the Markdown or HTML output options.

Practical Python Example: Scraping with Olostep's API

Integrating Olostep into a Python project is straightforward using the requests library. There is no complex SDK to install. Here is a practical, copy-pasteable python web scraping example that fetches the content of a target URL and returns it in structured JSON format, including robust error handling and retries.

import requests

import json

import time

import os

API_KEY = os.environ.get("OLOSTEP_API_KEY", "YOUR_OLOSTEP_API_KEY")

# API endpoint

url = "https://api.olostep.com/v1/scrapes"

# Request payload

payload = {

"url": "https://quotes.toscrape.com/",

"output_format": "json" # Can be 'html', 'markdown', or 'pdf'

}

# Request headers

headers = {

"x-olostep-api-key": API_KEY,

"Content-Type": "application/json"

}

# Retry logic parameters

max_retries = 3

backoff_factor = 2

for attempt in range(max_retries):

try:

# Make the POST request to the Olostep API

response = requests.post(url, headers=headers, json=payload, timeout=30)

# Raise an HTTPError for bad responses (4xx or 5xx)

response.raise_for_status()

# Print the structured JSON output on success

scraped_data = response.json()

print("--- Full Response Payload ---")

print(json.dumps(scraped_data, indent=2))

# Example of accessing specific data

if scraped_data.get("data"):

print("\n--- Extracted Quotes ---")

for item in scraped_data["data"]["quotes"]:

print(f"'{item['text']}' - {item['author']['name']}")

break # Exit loop if request was successful

except requests.exceptions.HTTPError as err:

print(f"HTTP Error on attempt {attempt + 1}/{max_retries}: {err}")

print(f"Response Body: {err.response.text}")

if err.response.status_code < 500:

# For 4xx errors, retrying is unlikely to help

break

# For 5xx server errors, wait and retry

time.sleep(backoff_factor ** attempt)

except requests.exceptions.RequestException as e:

print(f"An error occurred on attempt {attempt + 1}/{max_retries}: {e}")

time.sleep(backoff_factor ** attempt)

else:

print("Failed to retrieve data after multiple retries.")Example Response Payload:

{

"status": "success",

"data": {

"quotes": [

{

"text": "The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.",

"author": {

"name": "Albert Einstein",

"url": "/author/Albert-Einstein"

},

"tags": [

"change",

"deep-thoughts",

"thinking",

"world"

]

}

]

},

"metadata": {

"url": "https://quotes.toscrape.com/",

"statusCode": 200,

"timestamp": "2025-06-15T12:00:00.000Z"

}

}Getting Started

Olostep provides a generous free tier of 500 credits, allowing developers to thoroughly test the service's capabilities without any initial investment. Pricing is transparent and scales with usage, making it accessible for both startups and established enterprises. As one of the more comprehensive scraping tools available, Olostep is a top-tier choice for any serious data extraction project.

Website: https://www.olostep.com/scrapers

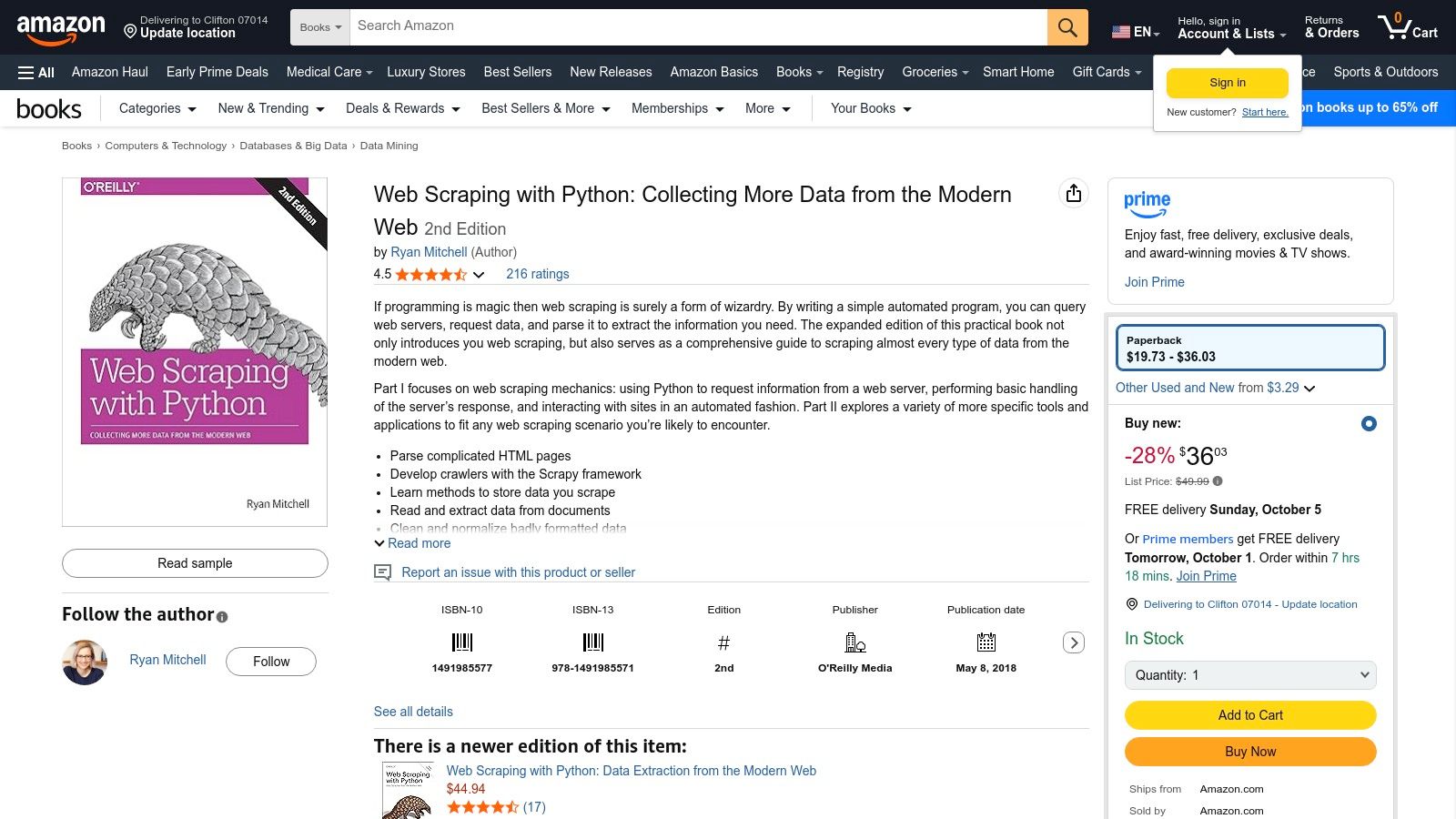

2. Amazon

Amazon serves as an essential, real-world target for developing robust web scraping skills. Its complex, JavaScript-heavy structure and dynamic content make it a challenging yet rewarding platform for one of the most practical python web scraping examples: extracting product data. Scraping Amazon allows developers to practice handling CAPTCHAs, managing session cookies, and parsing intricate HTML layouts, all of which are common hurdles in advanced web scraping projects.

This example focuses on extracting key details for a specific book, such as its title, price, and author. Unlike simpler static sites, Amazon's layout requires precise element selection. For instance, the main price is often split across multiple <span> elements within a div, a common technique to deter basic scraping bots. Successfully navigating this complexity is a key milestone for any data scientist or developer.

Example: Scraping Book Details with Olostep

A direct requests.get() call to an Amazon URL will likely be blocked or served a simplified page without the necessary data. Using a professional scraping API like Olostep handles the underlying anti-bot challenges, including proxy rotation and header management. This allows you to focus purely on data extraction logic. Here is how you can use Python to scrape the "Web Scraping with Python" book page:

import requests

import json

import os

from bs4 import BeautifulSoup

# Set your Olostep API key from environment variables for security

API_KEY = os.environ.get("OLOSTEP_API_KEY", "YOUR_OLOSTEP_API_KEY")

# The Amazon product URL to scrape

target_url = "https://www.amazon.com/Web-Scraping-Python-Collecting-Modern/dp/1491985577"

# Define the payload for the Olostep API

payload = {

"url": target_url,

"output_format": "html",

"options": {

"waitFor": 2000 # Wait 2 seconds for dynamic content to load

}

}

# Set the required headers for the API request

headers = {

"x-olostep-api-key": API_KEY,

"Content-Type": "application/json"

}

try:

# Make the POST request to the Olostep API endpoint

response = requests.post("https://api.olostep.com/v1/scrapes", headers=headers, json=payload, timeout=60)

response.raise_for_status()

# Parse the JSON response to get the HTML content

data = response.json()

html_content = data.get("content")

if not html_content:

print("Failed to retrieve HTML content.")

else:

# Use BeautifulSoup to parse the returned HTML

soup = BeautifulSoup(html_content, "html.parser")

# Extract specific book details using stable CSS selectors

title = soup.select_one("#productTitle")

author = soup.select_one(".author .a-link-normal")

price = soup.select_one(".a-price .a-offscreen")

print("--- Scraped Amazon Product Data ---")

print(f"Title: {title.get_text(strip=True) if title else 'Not found'}")

print(f"Author: {author.get_text(strip=True) if author else 'Not found'}")

print(f"Price: {price.get_text(strip=True) if price else 'Not found'}")

except requests.exceptions.HTTPError as err:

print(f"HTTP Error: {err}")

print(f"Response Body: {err.response.text}")

except requests.exceptions.RequestException as e:

print(f"An error occurred: {e}")Strategic Takeaways

- Anti-Bot Circumvention: Amazon employs sophisticated anti-bot systems. The

waitForoption in the Olostep payload is crucial, as it allows time for client-side JavaScript to render the final HTML, ensuring data like pricing and reviews are present. - Precise Element Selection: Parsing the returned HTML requires careful inspection. Use browser developer tools to identify stable CSS selectors for the title (

#productTitle), author (.author .a-link-normal), and price (.a-price .a-offscreen). - Data Aggregation: This single-page scrape is the foundation. To scale this into a project, you would build a crawler to follow "Customers who bought this item also bought" links or pagination on search result pages. To dive deeper into this topic, you can learn more about how to scrape Amazon product data.

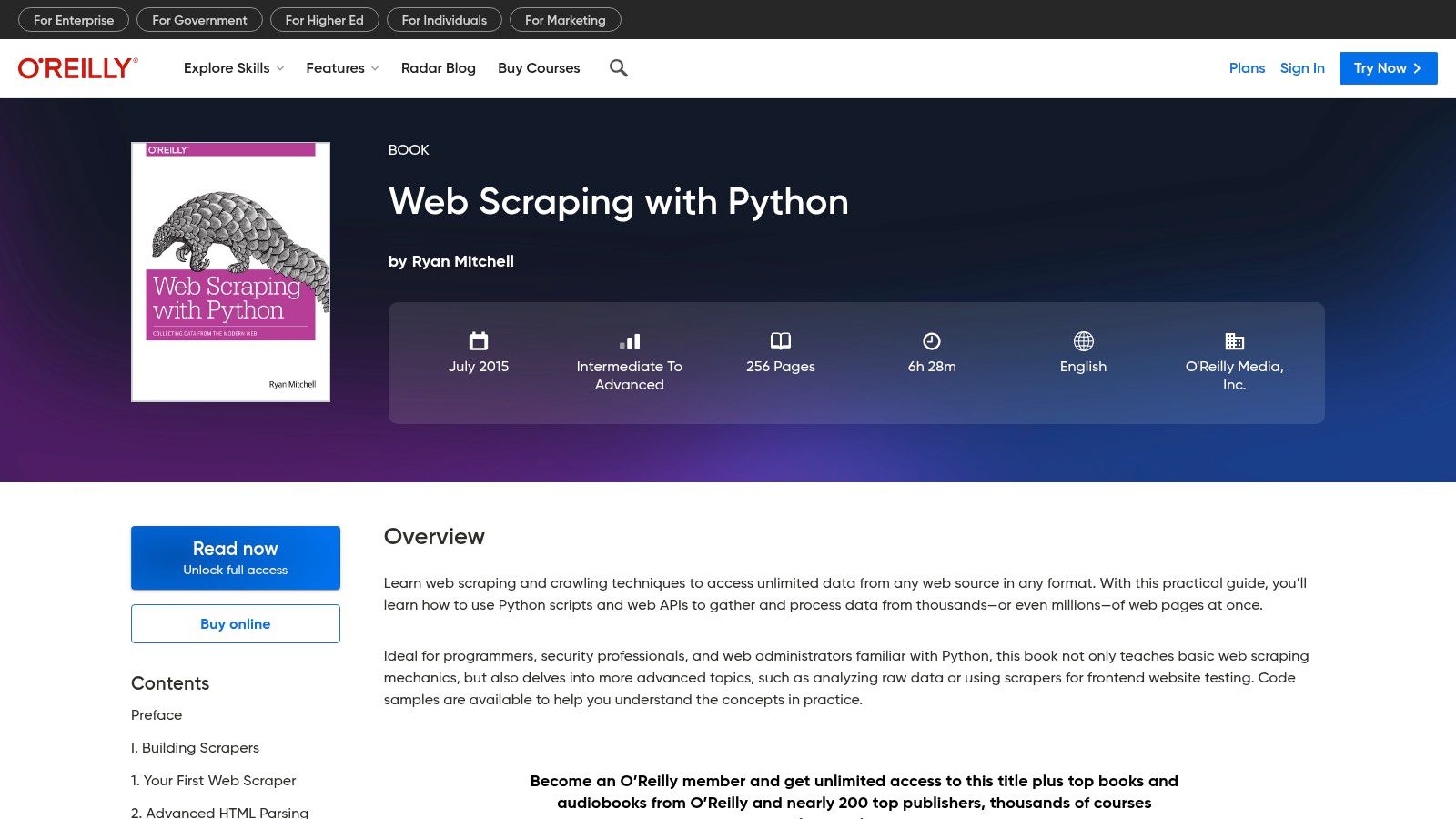

3. O’Reilly Learning

O’Reilly Learning offers a unique target for one of the most practical python web scraping examples: extracting structured data from behind a subscription paywall. Scraping authenticated sessions is a critical skill, and O'Reilly's platform provides a safe, legal environment to practice. This involves managing login credentials, handling cookies, and navigating a well-structured but access-controlled platform to pull information like chapter titles or code snippets from technical books.

This example focuses on extracting the table of contents from the "Web Scraping with Python" book page. Unlike public websites, the key challenge here isn't bypassing aggressive anti-bot measures, but rather maintaining an authenticated state. Successfully automating data extraction from a platform like O'Reilly demonstrates proficiency in handling session-based scraping, a common requirement for enterprise data projects.

Example: Scraping a Book’s Table of Contents with Olostep

Directly accessing a specific book URL on O'Reilly without logging in will redirect you or show a limited preview. To scrape the full content, you need to provide authentication details. A scraping API like Olostep can manage session cookies securely, allowing you to pass login credentials within the API call and access the content as an authenticated user.

import requests

import json

import os

from bs4 import BeautifulSoup

# Securely get credentials and API key from environment variables

API_KEY = os.environ.get("OLOSTEP_API_KEY", "YOUR_OLOSTEP_API_KEY")

OREILLY_EMAIL = os.environ.get("OREILLY_EMAIL", "YOUR_OREILLY_EMAIL")

OREILLY_PASSWORD = os.environ.get("OREILLY_PASSWORD", "YOUR_OREILLY_PASSWORD")

# The O'Reilly book URL to scrape

target_url = "https://www.oreilly.com/library/view/web-scraping-with/9781491910283/"

# Define the payload for the Olostep API, including login steps

payload = {

"url": target_url,

"output_format": "html",

"options": {

"steps": [

{"action": "type", "selector": "#email", "value": OREILLY_EMAIL},

{"action": "type", "selector": "#password", "value": OREILLY_PASSWORD},

{"action": "click", "selector": "button[type='submit']"},

{"action": "waitForNavigation"}

]

}

}

# Set the required headers for the API request

headers = {

"x-olostep-api-key": API_KEY,

"Content-Type": "application/json"

}

try:

# Make the POST request to the Olostep API endpoint

response = requests.post("https://api.olostep.com/v1/scrapes", headers=headers, json=payload, timeout=90)

response.raise_for_status()

# Parse the JSON response

data = response.json()

html_content = data.get("content")

if not html_content:

print("Failed to retrieve HTML content after login.")

else:

# Use BeautifulSoup to parse the HTML and extract the chapter titles.

soup = BeautifulSoup(html_content, 'html.parser')

toc_items = soup.select('.toc-level-1 > a')

print(f"--- Table of Contents for 'Web Scraping with Python' ---")

for i, item in enumerate(toc_items, 1):

print(f"Chapter {i}: {item.get_text(strip=True)}")

except requests.exceptions.HTTPError as err:

print(f"HTTP Error: {err}")

print(f"Response Body: {err.response.text}")

except requests.exceptions.RequestException as e:

print(f"An error occurred: {e}")Strategic Takeaways

- Handling Authenticated Sessions: The

stepsparameter in the Olostep payload is the key. It automates the login process by typing credentials and clicking the submit button, establishing an authenticated session before the target page is loaded. - Respectful Scraping: Always scrape content you have a legitimate right to access, such as through a paid subscription. Ensure your scraping activities comply with the platform's terms of service and do not disrupt the service for other users.

- Structured Data Extraction: The table of contents on O'Reilly uses consistent HTML tags (

.toc-level-1). This predictable structure makes it an excellent target for practicing hierarchical data extraction after you've successfully retrieved the authenticated page content.

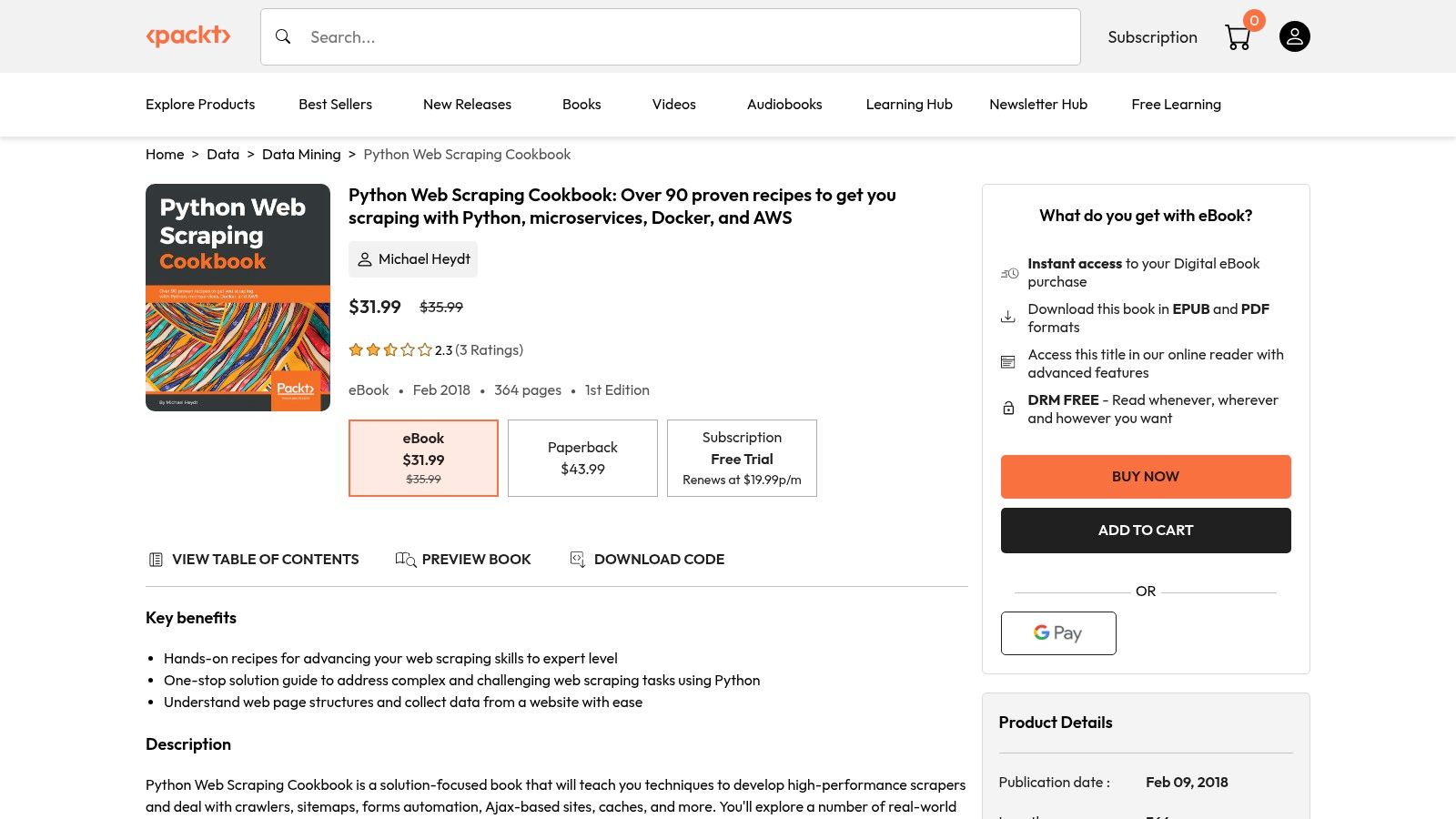

4. Packt

Packt Publishing offers a unique angle for python web scraping examples by providing the source code for entire books dedicated to the subject. Instead of scraping a live website for data, this example focuses on a different but equally important skill: programmatic downloading and organization of digital assets. This process involves navigating a user account, handling authentication, and downloading files, which are crucial skills for automating data acquisition from private portals or subscription services.

This example simulates scraping the product page to extract metadata about a book, such as its title, publication date, and key features. Scraping its product page is a great exercise in extracting structured data from a typical e-commerce layout. The goal is to collect information that could be used to catalog a library of technical books or compare different publications on a specific topic.

Example: Scraping Book Metadata with Olostep

A direct request to Packt might work for public pages, but using a scraping API like Olostep ensures consistent access and circumvents potential IP blocking or user-agent filtering. It simplifies the process by managing the low-level connection details, allowing the developer to focus on parsing the HTML to get the required book information.

import requests

import json

import os

from bs4 import BeautifulSoup

# Set your Olostep API key from environment variables for security

API_KEY = os.environ.get("OLOSTEP_API_KEY", "YOUR_OLOSTEP_API_KEY")

# The Packt product URL to scrape

target_url = "https://www.packtpub.com/en-us/product/python-web-scraping-cookbook-9781787286634"

# Define the payload for the Olostep API

payload = {

"url": target_url,

"output_format": "html"

}

# Set the required headers for the API request

headers = {

"x-olostep-api-key": API_KEY,

"Content-Type": "application/json"

}

try:

# Make the POST request to the Olostep API endpoint

response = requests.post("https://api.olostep.com/v1/scrapes", headers=headers, json=payload, timeout=60)

response.raise_for_status()

# Parse the JSON response

data = response.json()

html_content = data.get("content")

if html_content:

soup = BeautifulSoup(html_content, "html.parser")

# Extract metadata. Selectors may change over time.

title = soup.select_one("h1.product-main__title")

pub_date = soup.select_one(".product-main__descriptor")

features = soup.select(".product-main__key-features li")

print("--- Scraped Packt Book Metadata ---")

print(f"Title: {title.get_text(strip=True) if title else 'Not Found'}")

print(f"Publication Info: {pub_date.get_text(strip=True) if pub_date else 'Not Found'}")

if features:

print("Key Features:")

for feature in features:

print(f"- {feature.get_text(strip=True)}")

else:

print("Could not retrieve HTML content from the response.")

except requests.exceptions.HTTPError as err:

print(f"HTTP Error: {err}")

print(f"Response Body: {err.response.text}")

except requests.exceptions.RequestException as e:

print(f"An error occurred: {e}")Strategic Takeaways

- Metadata Extraction: This type of scraping is common for building datasets. By parsing the saved HTML, you can extract the book title (from the

h1tag), publication date (e.g., from a<span>with a specific class), and key features (from a<ul>list). - Static Site Parsing: Unlike Amazon, Packt's product pages are largely static. This makes element selection more straightforward and less prone to breaking from JavaScript rendering changes. You can rely on stable CSS selectors found via browser developer tools.

- Workflow Automation: The true value lies in scaling this script. You could build a crawler that starts on a search results page for "web scraping", collects the URL for each book, and then runs this script on each one to build a comprehensive database of available resources.

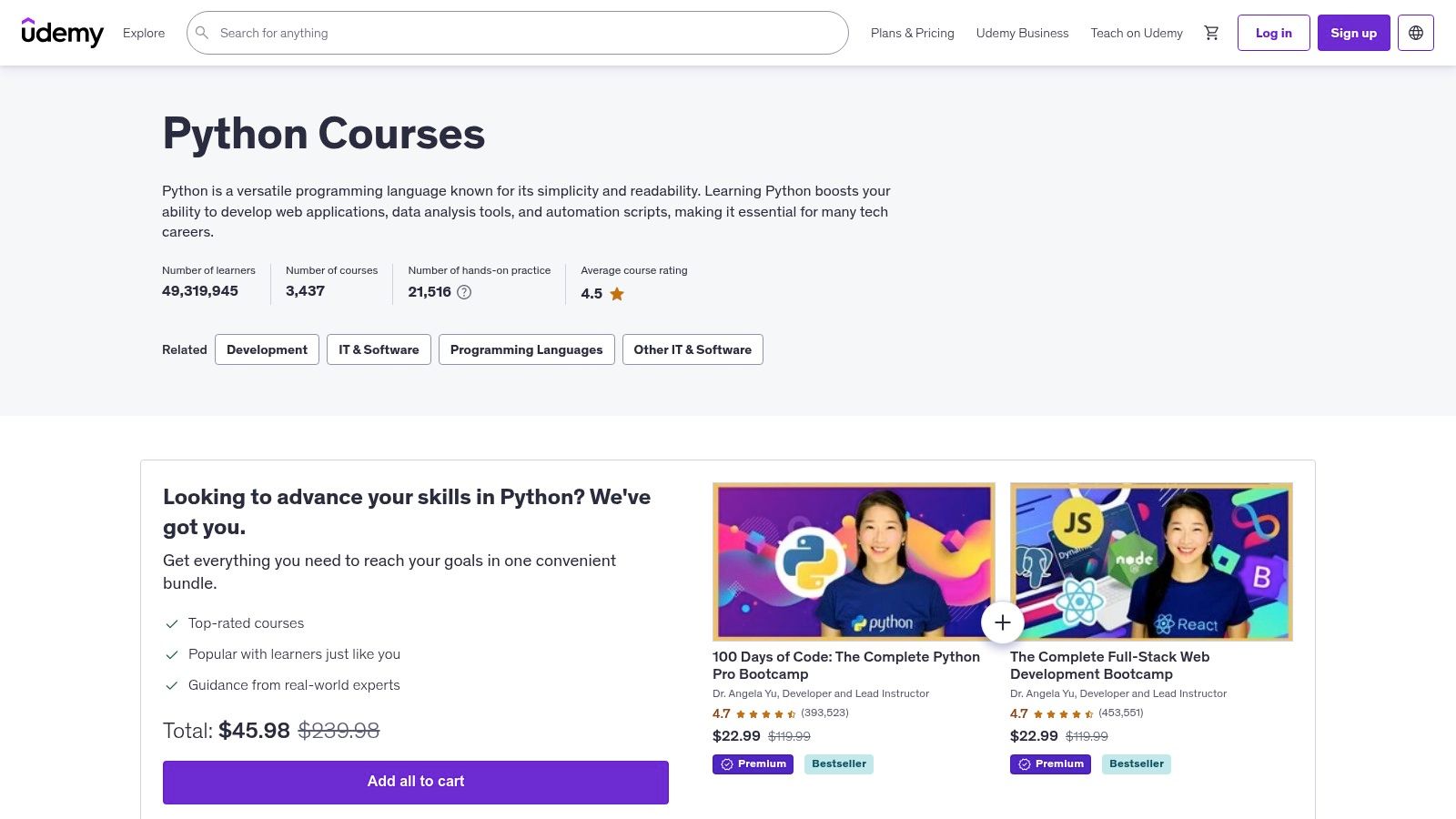

5. Udemy

Udemy is a massive online learning platform that provides a different kind of python web scraping examples: structured, project-based video courses. Instead of scraping the site itself, Udemy offers the educational resources to learn how to scrape other sites effectively. It's a go-to resource for beginners and intermediate developers looking to learn scraping with libraries like BeautifulSoup, Selenium, and Scrapy through guided, hands-on projects.

This example is meta; it focuses on leveraging a platform to acquire scraping skills rather than extracting data from it. Courses on Udemy often include downloadable code samples, practical exercises like scraping e-commerce sites or social media, and lifetime access to content. This makes it an invaluable starting point for building a foundational understanding of web scraping principles.

Example: Finding a Top-Rated Web Scraping Course with Olostep

While you wouldn't typically scrape Udemy for course content, you might scrape its listings to programmatically find the best courses based on specific criteria like ratings, student count, and update frequency. This is useful because Udemy's own search filters can sometimes be limiting. A direct scraping attempt may face bot detection, so using an API like Olostep is a reliable approach.

import requests

import json

import os

from bs4 import BeautifulSoup

# Set your Olostep API key from environment variables for security

API_KEY = os.environ.get("OLOSTEP_API_KEY", "YOUR_OLOSTEP_API_KEY")

# The Udemy search URL for web scraping courses

target_url = "https://www.udemy.com/courses/search/?src=ukw&q=python+web+scraping"

# Define the payload for the Olostep API

payload = {

"url": target_url,

"output_format": "html",

"options": {

"waitFor": 3000 # Wait 3 seconds for course listings to render

}

}

# Set the required headers for the API request

headers = {

"x-olostep-api-key": API_KEY,

"Content-Type": "application/json"

}

try:

# Make the POST request to the Olostep API endpoint

response = requests.post("https://api.olostep.com/v1/scrapes", headers=headers, json=payload, timeout=60)

response.raise_for_status()

# Parse the JSON response

data = response.json()

html_content = data.get("content")

if html_content:

soup = BeautifulSoup(html_content, "html.parser")

# NOTE: Udemy's class names can be complex and auto-generated. This is an example selector.

course_cards = soup.select('div[class*="course-card-module--container--"]')

print("--- Top Udemy Courses for 'Python Web Scraping' ---")

for card in course_cards[:5]: # Display top 5 results

title = card.select_one('h3[class*="course-card-title-module--title--"]')

rating = card.select_one('span[class*="star-rating-module--rating-number--"]')

print(f"Course: {title.get_text(strip=True) if title else 'N/A'}")

print(f"Rating: {rating.get_text(strip=True) if rating else 'N/A'}\n")

else:

print("Could not retrieve HTML content for Udemy search results.")

except requests.exceptions.HTTPError as err:

print(f"HTTP Error: {err}")

print(f"Response Body: {err.response.text}")

except requests.exceptions.RequestException as e:

print(f"An error occurred: {e}")Strategic Takeaways

- Learning as a Use Case: This platform's primary value is educational. The best strategy is to invest in a highly-rated, recently-updated course to learn the fundamentals before tackling complex targets. Look for courses that cover anti-bot techniques.

- Evaluate Before Buying: Always check the "last updated" date, student reviews, and the instructor's profile. Web technologies change rapidly, and an outdated course may teach deprecated methods. Scraping search results, as shown above, can help automate this evaluation at scale.

- Practical Application: The strength of Udemy is its focus on practical, project-based learning. Follow along with the code examples provided in a course and then try to adapt them to scrape a different website to solidify your understanding. A good course to start with is Web Scraping in Python with BeautifulSoup and Selenium.

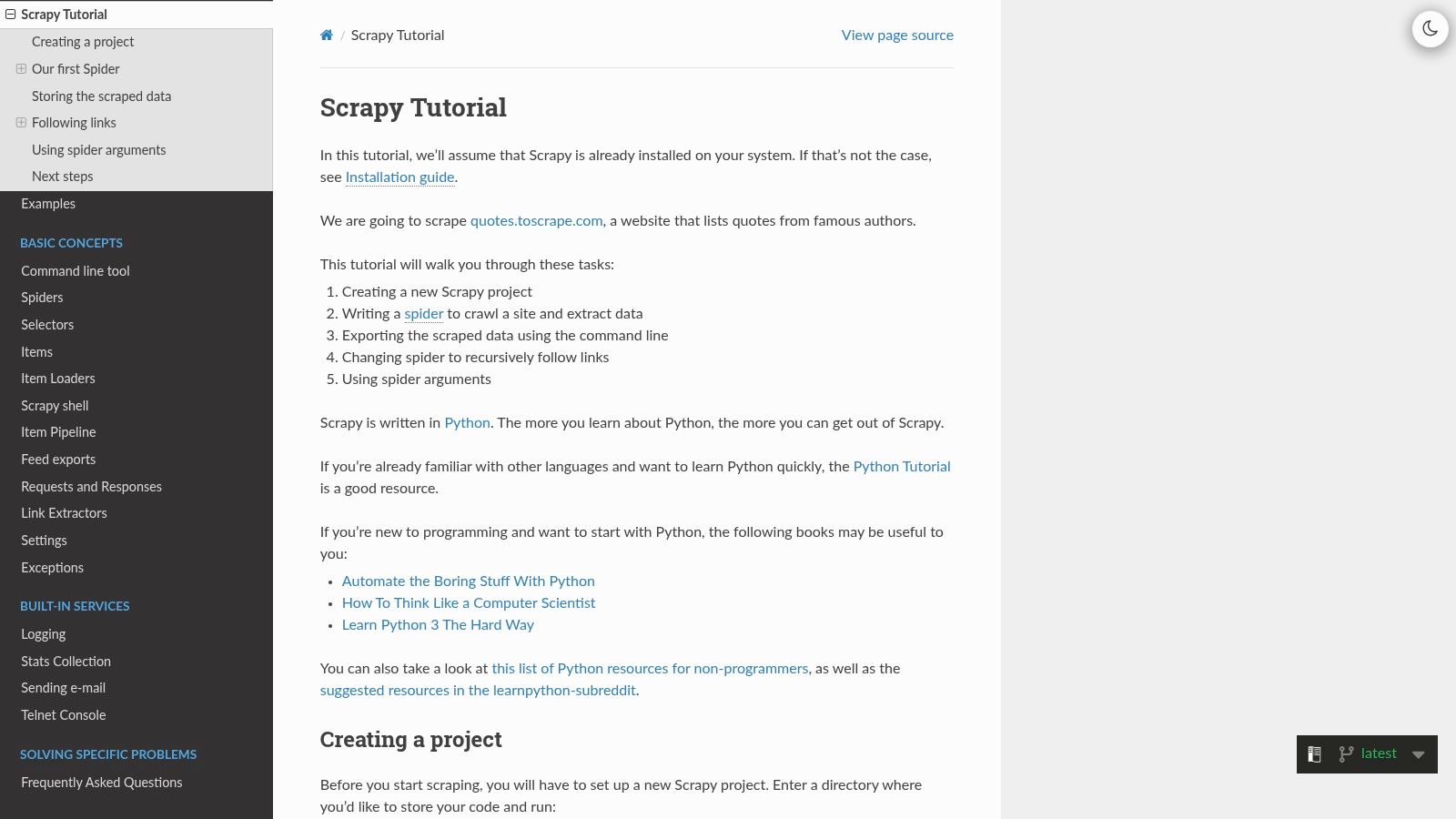

6. Scrapy

Scrapy is not just a library but an entire framework dedicated to web crawling and scraping. It provides all the necessary tools to build robust, production-grade spiders that can handle complex logic, data processing pipelines, and large-scale crawls. Its official documentation site serves as one of the best python web scraping examples because its tutorial is a hands-on project in itself, guiding you from a simple spider to a fully functional data extraction tool.

The tutorial walks you through building a spider to scrape quotes.toscrape.com, a site designed for this exact purpose. Unlike simple scripts, Scrapy introduces concepts like Items for structured data, Pipelines for processing and storing data, and built-in support for exporting to formats like JSON and CSV. This structured approach is what sets it apart, making it the industry standard for serious scraping projects.

Example: Building a Basic Quote Spider with Scrapy

While a professional API handles proxies and JavaScript rendering, Scrapy excels at managing the crawling logic, state, and data processing flow. The following example is adapted from the official Scrapy tutorial and demonstrates how to define a spider to extract quotes and authors.

First, you would set up a Scrapy project (scrapy startproject tutorial) and then define a spider in the spiders directory.

# In tutorial/spiders/quotes_spider.py

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

"https://quotes.toscrape.com/page/1/",

]

def parse(self, response):

"""This function parses the response and extracts scraped data."""

for quote in response.css("div.quote"):

yield {

"text": quote.css("span.text::text").get(),

"author": quote.css("small.author::text").get(),

"tags": quote.css("div.tags a.tag::text").getall(),

}

# Follow the "next" link to the next page

next_page = response.css("li.next a::attr(href)").get()

if next_page is not None:

# Recursively call parse() on the next page

yield response.follow(next_page, callback=self.parse)To run this spider, you navigate to the project directory in your terminal and execute: scrapy crawl quotes -o quotes.json. This command runs the spider and saves the output to a file named quotes.json.

Strategic Takeaways

- Asynchronous by Design: Scrapy is built on Twisted, an asynchronous networking framework. This allows it to handle many requests concurrently, making it significantly faster than sequential scripts for large-scale crawls.

- Data Pipelines: The real power of Scrapy lies in its Item Pipelines. These are components that process the scraped data sequentially, perfect for cleaning HTML, validating data integrity, checking for duplicates, and saving the data to a database.

- Extensibility and Middleware: Scrapy's middleware architecture allows you to customize how requests and responses are handled. This is where you can integrate proxy services, user-agent rotation, and other techniques to avoid being blocked. For a deeper understanding of these techniques, you can explore how to scrape without getting blocked.

7. Real Python

Real Python is a premier educational resource for developers looking to master Python, and its web scraping tutorials are a standout. It serves as a foundational learning environment rather than a target website for scraping. The platform provides some of the most comprehensive python web scraping examples available, walking learners through projects using libraries like BeautifulSoup and requests. This makes it an ideal starting point for developers aiming to build a solid, ethical understanding of data extraction from the ground up.

Unlike a direct scraping target, Real Python's value lies in its high-quality, step-by-step content. The tutorials break down complex concepts, such as parsing HTML with BeautifulSoup and handling different data types, into manageable lessons. This focus on fundamentals is crucial for building robust scrapers that can handle real-world challenges like malformed HTML and complex site structures.

Example: Following a Real Python Tutorial

Since Real Python is an educational platform, the "example" involves implementing the code from one of its tutorials. The code below is a conceptual representation of a common beginner exercise found on the site: scraping quotes from a simple, static website designed for practice. This simulates the learning process a developer would go through on their platform.

import requests

from bs4 import BeautifulSoup

# The URL of the practice website, as featured in many tutorials

target_url = "http://quotes.toscrape.com/"

try:

# Make a simple GET request to the static site

response = requests.get(target_url, headers={'User-Agent': 'My-Cool-Scraper/1.0'})

response.raise_for_status() # Check for HTTP request errors

# Parse the HTML content of the page

soup = BeautifulSoup(response.content, "html.parser")

# Find all quote containers

quotes = soup.find_all("div", class_="quote")

print(f"Found {len(quotes)} quotes on the page.\n")

scraped_data = []

# Loop through each quote container and extract the text and author

for quote in quotes:

text = quote.find("span", class_="text").get_text(strip=True)

author = quote.find("small", class_="author").get_text(strip=True)

scraped_data.append({"text": text, "author": author})

print(f'"{text}" - {author}')

# You could now save scraped_data to a file, e.g., JSON or CSV

print(f"\nSuccessfully scraped {len(scraped_data)} quotes.")

except requests.exceptions.RequestException as e:

print(f"An error occurred: {e}")Strategic Takeaways

- Building Foundational Skills: Real Python’s strength is in its pedagogy. It teaches you how to think like a scraper developer, starting with inspecting page sources, identifying stable selectors, and handling potential errors gracefully.

- Ethical and Legal Guidance: The platform often includes sections on the ethical and legal considerations of web scraping, such as respecting

robots.txtfiles and understanding a website's terms of service. This is an invaluable lesson for any data professional. - Progressive Learning Path: Tutorials are structured to build upon each other. You start with static sites, then move to more advanced topics like handling pagination, scraping JavaScript-rendered content, and storing data in formats like CSV or databases. Visit their web scraping tutorials to start learning.

Anti-Bot Guidance and Ethical Considerations

When scraping websites, you are an uninvited guest. Acting responsibly is crucial for avoiding blocks and maintaining long-term access.

Anti-Bot Evasion Techniques

- Rate Limiting and Delays: Make requests at a human-like pace. Implement

time.sleep()between requests to avoid overwhelming the server. - Realistic User-Agent Headers: Identify your bot, but mimic a real browser. A header like

{'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'}is less likely to be blocked than the defaultpython-requestsagent. - Proxy Rotation: Use a pool of IP addresses (residential proxies are best) to distribute your requests. Services like ScrapingBee, Bright Data, and Olostep manage this automatically.

- Headless Browsers (When Necessary): For sites heavily reliant on JavaScript, tools like Selenium or Puppeteer (often managed by a scraping API) are needed to render the page fully before scraping.

Ethical and Legal Notes

- Respect

robots.txt: This file at the root of a domain (e.g.,example.com/robots.txt) outlines rules for bots. While not legally binding, respecting it is a standard ethical practice. - Check Terms of Service (ToS): Many sites explicitly forbid scraping in their ToS. Violating them can lead to legal action, although this is rare for small-scale, non-commercial projects.

- Do Not Scrape Personal or Copyrighted Data: Avoid collecting personally identifiable information (PII) or copyrighted content for redistribution without permission.

- Don't Overwhelm Servers: Your scraping activity should not degrade the website's performance for other users. This is the most common reason for getting blocked.

Troubleshooting Common Scraping Failures

- CAPTCHAs: These are designed to stop bots. Solving them requires specialized services (e.g., 2Captcha) or using a high-end scraping API like Olostep that handles them transparently.

- 403 Forbidden / 429 Too Many Requests: Your IP address or user-agent has been flagged. Slow down, rotate your IP address/proxy, and check your headers.

- 5xx Server Errors: The server is having trouble. This could be temporary or because your requests are causing strain. Implement exponential backoff (wait longer after each failed retry) before trying again.

- Timeouts: The server is not responding quickly enough. Increase the

timeoutparameter in yourrequestscall and ensure your network connection is stable. - Empty or Incorrect Data: The content is likely loaded via JavaScript after the initial HTML page load. You need a tool that can render JavaScript, like a headless browser or a scraping API with that capability.

Comparison of Web Scraping API Alternatives

While this guide uses Olostep, several other excellent services can manage the complexities of modern web scraping.

| Service | Key Strengths | Best For | Fair Limitations |

|---|---|---|---|

| Olostep | Structured data output (JSON/Markdown), community parsers, generous free tier. | Developers needing clean, pre-parsed data and high scalability. | Custom parsers require some initial learning. |

| ScrapingBee | Easy-to-use API, screenshot feature, excellent for JavaScript rendering. | Quick projects and developers who want a simple, reliable API without much configuration. | Can become costly at very high volumes compared to some competitors. |

| Bright Data | Massive proxy network (72M+ IPs), comprehensive tool suite (Scraping Browser, Web Unlocker). | Enterprise-level users needing the largest and most diverse proxy infrastructure. | Complex pricing structure and can be overwhelming for beginners. |

| ScraperAPI | Handles proxies, browsers, and CAPTCHAs with a simple API call. | Developers looking for a straightforward, all-in-one solution to avoid getting blocked. | Performance can vary depending on the target site's complexity. |

| Scrapfly | Anti Scraping Protection bypass, Python SDK, session management. | Python developers who want fine-grained control over scraping sessions and browser behavior. | Newer player in the market, community and support resources are still growing. |

Your Web Scraping Checklist and Next Steps

We've explored a diverse set of python web scraping examples, demonstrating how to tackle everything from simple static sites to complex, anti-bot-protected e-commerce platforms like Amazon. The journey through libraries like Scrapy and APIs like Olostep reveals a core truth: successful web scraping is not about finding a single magic tool, but about building a strategic toolkit.

You saw how a dedicated API can abstract away the complexities of CAPTCHAs and rotating proxies, allowing you to focus on data extraction. You also learned how to structure robust, scalable spiders with Scrapy for large-scale projects and how to handle dynamic content efficiently. The key takeaway is that the right approach depends entirely on your project's specific challenges.

Your Project Launch Checklist

Use this checklist to kickstart your next web scraping initiative and ensure you've covered all the essential bases.

- Define Your Goal: What specific data points do you need? (e.g., product prices, reviews, article text).

- Legal and Ethical Review: Have you checked the website's Terms of Service and

robots.txt? - Target Site Analysis: Is the site static or dynamic? What anti-bot technologies are in use?

- Tool Selection: Choose your primary tool (e.g., Olostep API, Scrapy, Selenium) based on the site's complexity.

- Develop the Scraper: Write initial code to fetch and parse a single page correctly.

- Add Resilience Features: Integrate error handling, retries with exponential backoff, and appropriate delays (

time.sleep()). - Scale and Deploy: Plan how you will run the scraper at scale, manage proxies, and store the data.

By methodically working through these steps, you can confidently apply the python web scraping examples from this article to your own unique challenges. The world of web data is vast, and with the right strategy, you now have the keys to unlock its potential.

Ready to skip the headaches of IP blocks and CAPTCHAs? The examples in this article show that a reliable scraping API is often the fastest path to getting the data you need. Sign up for a free Olostep account today to get 500 credits and start building your first robust web scraper in minutes using our simple POST request API.